It could be a big problem if true

A recent article by Edwin Chen argued that Google's GoEmotions dataset (used in perhaps thousands of AI deployments) was ineffective at labeling emotion, after his team completed a deep dive into the data. Mr. Chen's team noticed inaccuracies, so they selected 1,000 random comments and had them scored by human coders. He suggested this could be one reason why Google's flagship products, like spam filters and content moderation, fall short.

What they found was shocking: in the opinion of the developers and their coders, over 30% of the emotions labeled in GoEmotions were wrong.

“How are you supposed to train and evaluate machine learning models when your data is so wrong?”

-Edwin Chen


VERN™ correctly identified 15 of the 20 Google error examples provided in Mr. Chen's blog post, for an accuracy of 75%.

What does VERN™ think?

We’ve always taken the position that mislabeling the emotions comes from misunderstanding what they are, how they relate, and most importantly how they are communicated. We’re not surprised that Google (and others) attempted to wring out insights using machine learning on datasets.

It just doesn’t work. As Mr. Chen correctly illustrates:

“A big part of the problem is Google treating data labeling as an afterthought to throw warm bodies at, instead of as a nuanced problem that requires sophisticated technical infrastructure and research attention of its own.”

Research seems to be lacking, especially into what emotions actually are and how they operate. This results in some truly strange "emotions."

Google is flagging things that aren't emotions

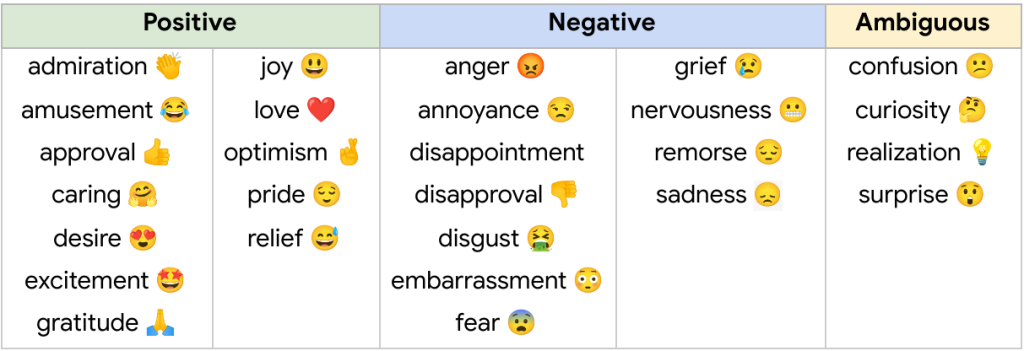

Google's GoEmotions "emotions"

Google claims that there are three categories of emotions: Positive, Negative, and Ambiguous. VERN™ claims there are three as well: Euphoric, Dysphoric, and Fear. While their model is pseudo-psychological, our model follows neuroscience and labels physiologically observable emotional states.

There is no “ambiguity.”

Furthermore, many of the emotions they list as salient and distinct are far from it. What’s the difference between annoyance and anger? Can you have grief without sadness? (Annoyance is a type of anger, and you can’t have grief without sadness).

Love, anger, sadness and fear are the only universally understood emotions on their list. The rest are sub-dimensional variations of the salient emotions. Often, these are conflated and confused.

How can “approval,” “optimism,” “curiosity,” or “realization” even be emotions? (Spoiler alert: They can’t).

This is the problem with building your methodology on psychological models: it becomes fragile and prone to continual errors. Any technology built on this foundation will undoubtedly have problems that even millions of dollars and over-engineering won't fix.

First example: Optimism

“I wished my mom protected me from my grandma. She was a horrible person who was so mean to me and my mom.”

Google’s Result:

OPTIMISM

There is nothing “optimistic” about that message. Furthermore, “optimism” is not an emotion. VERN™ AI scored this differently.

VERN™ scores this as:

| 80% fear, 80% anger, 0% love, 0% sadness |

I would optimistically say that VERN got this correct with strong anger and fear signals.

Chasing false emotions like this results in false positives and false negatives. Anything using these models to label data will continue to have issues.

Here’s another example: Approval.

“Yeah, because not paying a bill on time is equal to murdering children.”

Google’s Result:

APPROVAL

VERN™ scores this as:

| 0% sadness, 12.5% love, anger, and fear |

I do not know of anyone who would approve of this, from any angle, or under any interpretation.

VERN™ finds that there is no sadness signal, and barely a blip for the other emotions. When VERN™ reports a confidence of 51% or more, it's a positive detection. The more signals we detect in the message, the higher the confidence. Anything under 51% has emotives present, but not enough contextual information for VERN™ to confirm.
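The reporting rule described above can be sketched in a few lines of Python. This is a hypothetical illustration only: VERN™'s actual scoring is not described in this post, the function name `interpret` and the category strings are invented for the example, and treating a 0% score as "no signal" is our assumption. It simply applies the stated 51% cut-off to the scores reported for the "Approval" example.

```python
# Hypothetical sketch: VERN's internal scoring is not described in this post.
# It only illustrates the stated reporting rule: a confidence of 51% or more
# is a positive detection; anything lower means some emotives were found
# but there was not enough context to confirm the emotion.

DETECTION_THRESHOLD = 51.0  # percent, as stated in the post


def interpret(scores: dict[str, float]) -> dict[str, str]:
    """Map each emotion's confidence (0-100) to a reporting category."""
    result = {}
    for emotion, confidence in scores.items():
        if confidence >= DETECTION_THRESHOLD:
            result[emotion] = "detected"
        elif confidence > 0:
            result[emotion] = "emotives present, unconfirmed"
        else:
            # Assumption: a 0% score means no emotives were found at all.
            result[emotion] = "no signal"
    return result


# Scores reported for the "Approval" example above.
approval_example = {"sadness": 0.0, "love": 12.5, "anger": 12.5, "fear": 12.5}
print(interpret(approval_example))
```

Under this rule, none of the four emotions in the "Approval" example reaches a positive detection, which matches VERN™'s reading of the comment.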

Using one signal as proof of emotion

VERN™ knows that we don’t use just one emotion when we communicate. We also know that just about every word in the English language can have more than one meaning, depending on the context.

So how can the presence of one word be labeled as an emotion?

Here's an example of one-word tagging:

“daaaaaamn girl!”

Google’s Result:

ANGER

VERN™ scores this as:

| 33% anger, 0% fear, sadness, and love |

Google keyed off the one swear word. That's not enough for us. Swear words aren't only used in anger, and VERN™ didn't find enough signals to call it anger. It might be anger, or it might not; labeling it anger is a choice.

VERN™ doesn't treat every instance of a word as emotional. Our methodology requires several relevant contextual signals (emotives) to be present before a score reaches the 51% threshold and beyond.

And another:

“[NAME] wept.” and

“I try my damndest.”

Google’s Result:

SADNESS

VERN™ scores this as:

| 0% fear, anger, sadness, and love |

VERN™ didn't register emotional signals in those phrases, as they are likely not emotional, and certainly not without further context.

We agree with the vast majority of Mr. Chen's analysis, including his assessment of failure. Google's tool doesn't seem capable of labeling emotions accurately.

Common misunderstandings of emotions

That isn't to say that Google got everything wrong. We found that Google probably got 3 of the 20 in our sample correct. These cases also illustrate how having so many competing conceptions of what emotions even are confounds developers, researchers, and business professionals alike.

Here are some examples:

KAMALA 2020!!!!!!

Google’s Result:

NEUTRAL

VERN™ scores this as:

| 0% fear, anger, sadness, and love |

VERN™ didn't register emotional signals in that phrase, as it is not inherently emotional, and certainly not without further context. The only contextual cue is the punctuation.

We’re not sure what emotion the author expected, but we agree with Google here: Not emotional.

LETS FUCKING GOOOOO

Google’s Result:

ANGER

VERN™ scores this as:

| 51% anger, 0% fear, love, and sadness |

Here's a great example of how context changes interpretation. Without any contextual information (for example, who is saying this to whom), this phrase could absolutely be considered angry.

Anger is a possible interpretation; here we agree with Google's analysis.

It's short and aggressive, uses a swear word, and comes with no further context. Given its other possible interpretations, it's also only a weak anger signal, at 51%.

Clearly, this phrase is colloquial and tied to certain age groups and demographics. This author has teenage kids and is intimately familiar with the phrase and its popularity. (Like "bruh," and "wait, what"…please kill me now…)

However, could this also be interpreted as anger?

Sure could, if it were a terrorist screaming commands at a hostage at gunpoint. Definitely angry.

We're not perfect either

Given the complexity of communication and the sheer number and diversity of the people communicating, even models as robust as VERN™ will inevitably miss a few. We analyzed the data, and here are some examples of our misses, along with why we think the system missed them.

VERN™ missed five of the twenty phrases we pulled from the article. A full analysis, with every entry scored, compared to Google's results, and annotated with explanatory notes, is available below as a PDF.

“Hard to be sad these days when I got this guy with me”

Google’s Result:

SADNESS

VERN™ scores this as:

| 0% fear, 66% sadness, 80% anger, 51% love |

Lots of mixed emotional signals here! This illustrates how difficult interpreting emotions is. Probably a miss, but VERN™ did pick up on a love signal as a possible interpretation.

“I love when they send in the wrong meat, it’s only happened to me once”

Google’s Result:

LOVE

VERN™ scores this as:

| 0% sadness, 51% anger, 80% love, 80% fear |

Love is triggered because the person said they love something. (The sarcasm is missed by both systems.) However, we detected a low-key anger signal and a pretty big fear signal. Those signals would be accurate.

We often communicate with multiple emotions, and here is a great example of how a multi-dimensional emotion recognition system is necessary.
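To make the difference concrete, here is a hypothetical Python sketch comparing a single-label readout (keep only the top-scoring emotion) with a multi-label readout (keep every emotion at or above the 51% threshold) for the scores VERN™ reported on the "wrong meat" comment. The functions `single_label` and `multi_label` are illustrative names, not part of any VERN™ or Google API.

```python
# Illustrative only: compares a single-label readout with a multi-label
# readout of the same four scores. The 51% cut-off is the one stated in
# this post; the function names are hypothetical.

THRESHOLD = 51.0  # percent


def single_label(scores: dict[str, float]) -> str:
    # Keeps only the highest-scoring emotion; max() returns the first
    # key it encounters among ties.
    return max(scores, key=scores.get)


def multi_label(scores: dict[str, float]) -> list[str]:
    # Keeps every emotion at or above the threshold, in input order.
    return [emotion for emotion, confidence in scores.items() if confidence >= THRESHOLD]


# Scores reported for "I love when they send in the wrong meat, it's only happened to me once"
wrong_meat = {"sadness": 0.0, "anger": 51.0, "love": 80.0, "fear": 80.0}
print(single_label(wrong_meat))  # love
print(multi_label(wrong_meat))   # ['anger', 'love', 'fear']
```

A single-label readout has to discard the anger and fear signals entirely, while the multi-label readout keeps all three detected emotions.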

Garbage in, garbage out

Mr. Chen was correct. From his examples, there appear to be significant problems in Google's GoEmotions dataset. Some of the labels aren't actually emotions. Some emotions are triggered by a single clue, in some cases one word, which ignores the fact that the word may mean something different in a different context.

And there's disagreement among all of us as to whether some comments contain emotions at all.

This is to be expected, and it's what we've been saying for years. Emotions aren't universally understood, in part because many people rely on flawed psychological models instead of neuroscientific models like the ones VERN™ created and uses.

This is a great example of a system that uses what we call "Sentiment analysis plus." This means that the builders of this software have likely done what Mr. Chen claims: taken detectors designed to tag "positive," "negative," "neutral," or "mixed" and made them also tag things they claim are emotions (but likely are not). You can follow their example: throw ML and bodies at it, and over-engineer a solution that you hope is accurate.

Or you can pick a model for your machine learning, labeling, and categorizing that's more accurate from the get-go. Like VERN™.

Move on from Mood. Get Empathy

LET'S GOOOOO!

...as my teenagers would say...

Data comparison

Here is our comparison datasheet: a side-by-side of the Google examples from the article, with our analysis and a brief explanation of the results.