Adding Empathy to solve the emotional contagion problem

Large Language Models (LLMs) can do a lot of things well. They can summarize, distill and condense information. They can generate expected responses to questions based on their ability to fetch information. They can create, seemingly from whole cloth, masterpieces of art and copy.

Using GenAI in fields like customer service, healthcare, education and mental health offers the tantalizing possibility of automating and standardizing care. Labor shortages and costs make it harder to meet demand, not to mention burnout and the effects it has on caregivers and support staff.

But use at your own risk

In a previous blog we discussed our experiences with LLMs and detecting distinct, salient emotions. You can read it here.

The Achilles heel of these models is, ironically, what makes them work so well. They operate on probabilities, and where there is limited consensus on a topic (like emotions), they rarely give you the same answer twice.

That means that in order to work with people’s feelings, these models need to be told what those feelings are and how intensely they are being communicated. When you remove the doubt in a process called…

Detect, Direct and Quality Check™

…your LLM works in ways you never thought possible.

The VERN AI process we have developed enables the LLM to understand the user’s emotional context and use it to prompt for action.

It also uses VERN to make sure that the LLM’s responses aren’t making your users feel worse!

Step One: Detect

In this example, the user is a patient and is in a recovery program that was assigned to him by his doctor. He has been asked to provide feedback to his healthcare providers on symptoms and side effects of treatment.

Communication with staff can take place on a phone or via a computer. Increasingly, chatbots and virtual assistants enable health care workers to automate routine tasks. But without empathy, these automated exchanges can miss critical clues, which may result in undetected co-morbidities.

When the patient was asked whether he was pleased with his care plan, he responded negatively. (Taken from real-world product testing.)

A human may not understand that the person is upset about their care. Identifying and correctly labeling the response is crucial for future care. And in this case, a chatbot is not capable of empathy without VERN AI.

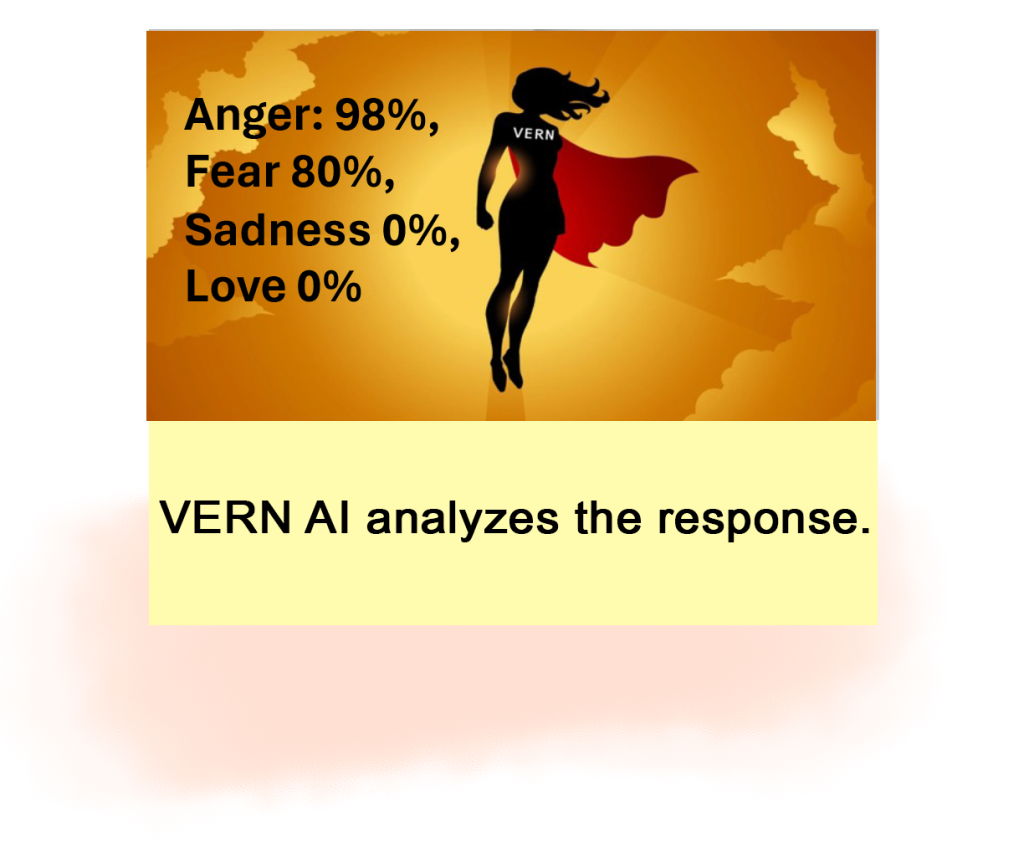

With VERN™ as your emotion recognition tool, the system detects the emotions that the patient is trying to communicate.

Here, Anger and Fear are high intensity (Anger at 98% and Fear at 80%). Sadness and Love/Joy are absent.
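As a rough illustration, a detection result like the one above can be held in a simple mapping from emotion name to a 0 to 100 score. This is only a sketch: the dictionary layout, the key names and the `present_emotions` helper are assumptions made for this example, not VERN's actual response format, and a score of 0 is used here to stand in for "absent".

```python
# Hypothetical representation of one detection result (not VERN's real schema).
# Scores are percentages from 0 to 100; 0 stands in for "absent".
patient_scores = {"anger": 98, "fear": 80, "sadness": 0, "love_joy": 0}


def present_emotions(scores):
    """Return (emotion, score) pairs with a non-zero score, strongest first."""
    found = [(name, score) for name, score in scores.items() if score > 0]
    return sorted(found, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    for name, score in present_emotions(patient_scores):
        print(f"{name}: {score}%")
```

Keeping the scores in a plain mapping like this makes it easy to log each response and compare it with later ones.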

A human operator would benefit from having each patient response tracked, to analyze their emotional states during and after treatment.

For an AI system, it's absolutely crucial to detect emotions accurately.

In our experience with various chatbots, LLMs, and other sentiment and emotion recognition tools, we have found that without proper emotional context, GenAI can go off the rails quickly. Most often this is because the emotion was mislabeled to begin with. As humans, we understand that someone can be angry, and that there are levels of anger. The more signals the sender provides, the more intense the receiver will perceive the emotion to be.

Capturing the correct emotional context at the start of the conversation enables conversational AI systems to address those emotions accordingly. But only if they get it right.

Step Two: Direct

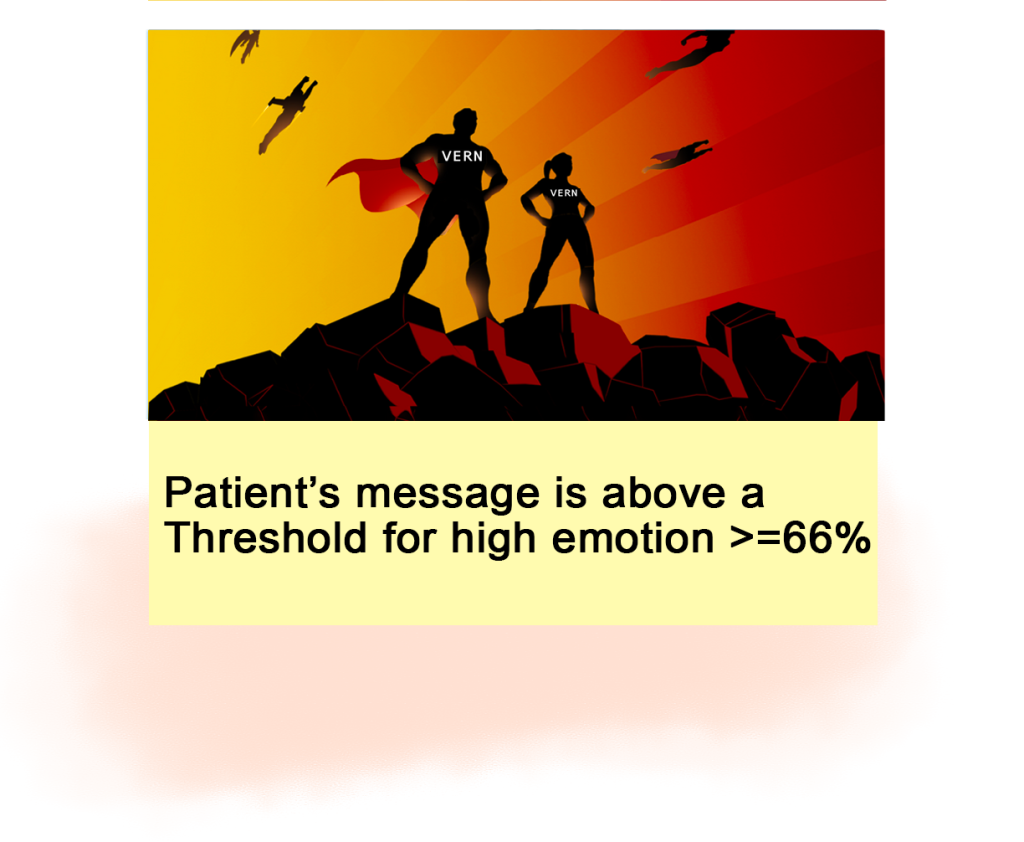

Using Gateways and Thresholds is a great way to handle emotions once they have been detected.

In this case, we want to address any high anger responses, so we set the Threshold for anger at greater than or equal to 66%.

(With VERN AI in your conversational AI, 66% is a moderate emotional score.)
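The threshold rule above comes down to one comparison. The sketch below assumes scores arrive as percentages in a dictionary keyed by emotion name; the function name `meets_threshold` and the data layout are illustrative, not part of VERN.

```python
ANGER_THRESHOLD = 66  # percent, the value used in this example


def meets_threshold(scores, emotion, threshold):
    """True when the emotion's score is greater than or equal to the threshold."""
    # An emotion missing from the result is treated as a score of 0 (assumption).
    return scores.get(emotion, 0) >= threshold


if __name__ == "__main__":
    patient_scores = {"anger": 98, "fear": 80}
    print(meets_threshold(patient_scores, "anger", ANGER_THRESHOLD))
```

Note that the comparison is inclusive, so a score of exactly 66 also triggers the rule.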

Now our conversational AI knows the emotions that are present. As the designer, you can create logic that deals with emotions where you encounter them, so that they can be immediately addressed.

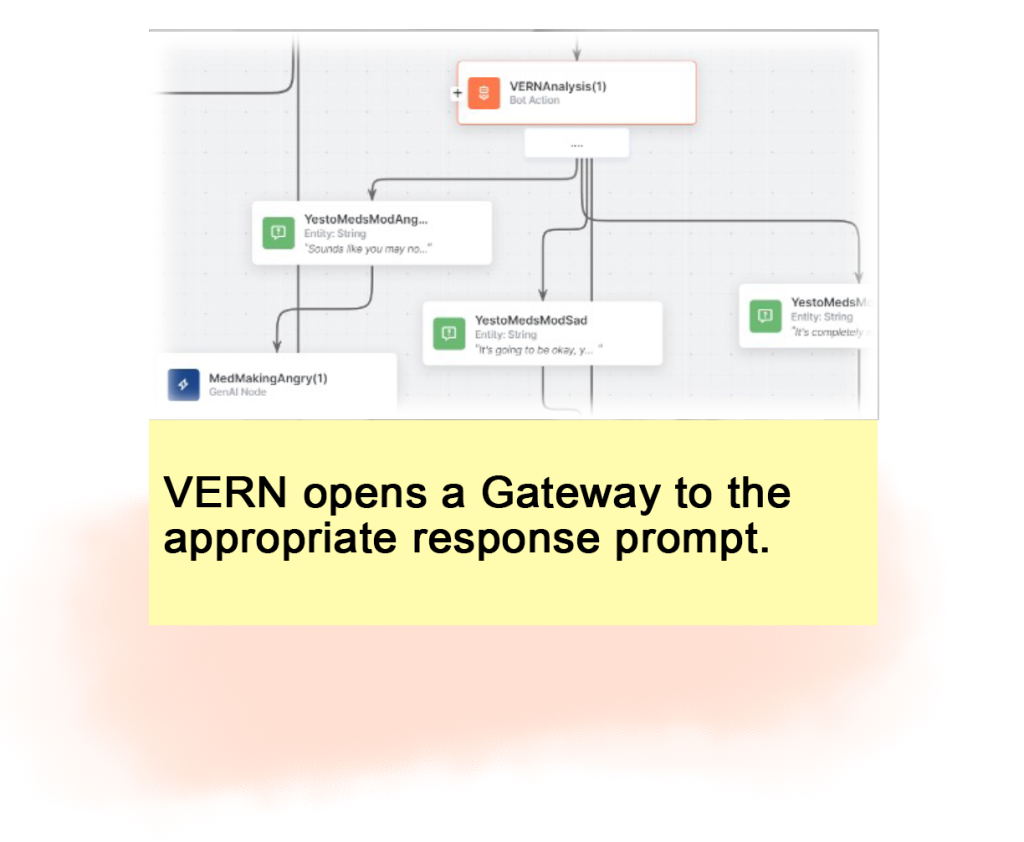

We call this the Gateway. Once the threshold is established, a gateway to a solution is provided to deal with the situation. In this case, we’re using a GenAI response prompt.
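One way to picture a Gateway is as an ordered list of rules, each pairing an emotion and a threshold with the action to take. The sketch below is a hypothetical design, not how VERN or any particular chatbot platform implements it; the action names `genai_response_prompt` and `standard_flow` are made up for illustration.

```python
def pick_gateway(scores, gateways, default="standard_flow"):
    """Return the action of the first gateway whose threshold is met.

    `gateways` is an ordered list of (emotion, threshold, action) tuples.
    """
    for emotion, threshold, action in gateways:
        if scores.get(emotion, 0) >= threshold:
            return action
    return default


# Hypothetical rule: anger at or above 66% is routed to a GenAI response prompt.
GATEWAYS = [("anger", 66, "genai_response_prompt")]

if __name__ == "__main__":
    print(pick_gateway({"anger": 98, "fear": 80}, GATEWAYS))
```

Because the rules are checked in order, you can put the emotions you most want to address first.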

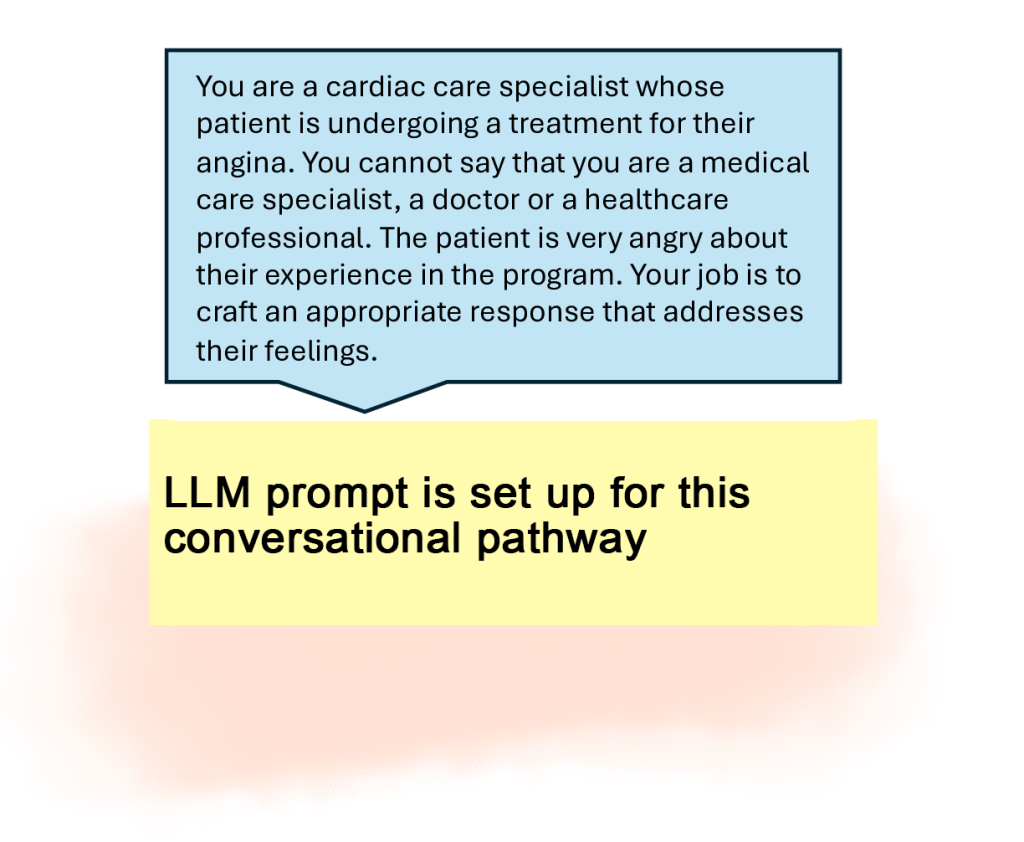

Here is an example of a prompt that is set up to create an LLM response. The prompt tells the LLM what the emotional context is, based on VERN’s detection of the emotion in the patient’s communication.

“You are a cardiac care specialist whose patient is undergoing a treatment for their angina. You cannot say that you are a medical care specialist, a doctor or a healthcare professional. The patient is very angry about their experience in the program. Your job is to craft an appropriate response that addresses their feelings.”
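A prompt like this can be assembled from a template so that the detected emotion is filled in automatically. The sketch below reuses the wording of the prompt above. The `FEELING_PHRASES` mapping is an assumption: only "very angry" comes from this example, and the other phrases are placeholders you would write yourself.

```python
PROMPT_TEMPLATE = (
    "You are a cardiac care specialist whose patient is undergoing a treatment "
    "for their angina. You cannot say that you are a medical care specialist, "
    "a doctor or a healthcare professional. The patient is {feeling} about "
    "their experience in the program. Your job is to craft an appropriate "
    "response that addresses their feelings."
)

# Hypothetical wording per emotion; only "very angry" appears in the article.
FEELING_PHRASES = {
    "anger": "very angry",
    "fear": "very afraid",
    "sadness": "very sad",
}


def build_prompt(dominant_emotion):
    """Fill the template with the phrase for the detected emotion."""
    return PROMPT_TEMPLATE.format(feeling=FEELING_PHRASES[dominant_emotion])


if __name__ == "__main__":
    print(build_prompt("anger"))
```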

The chatbot in this example now has the appropriate information for it to craft a response that is empathetic.

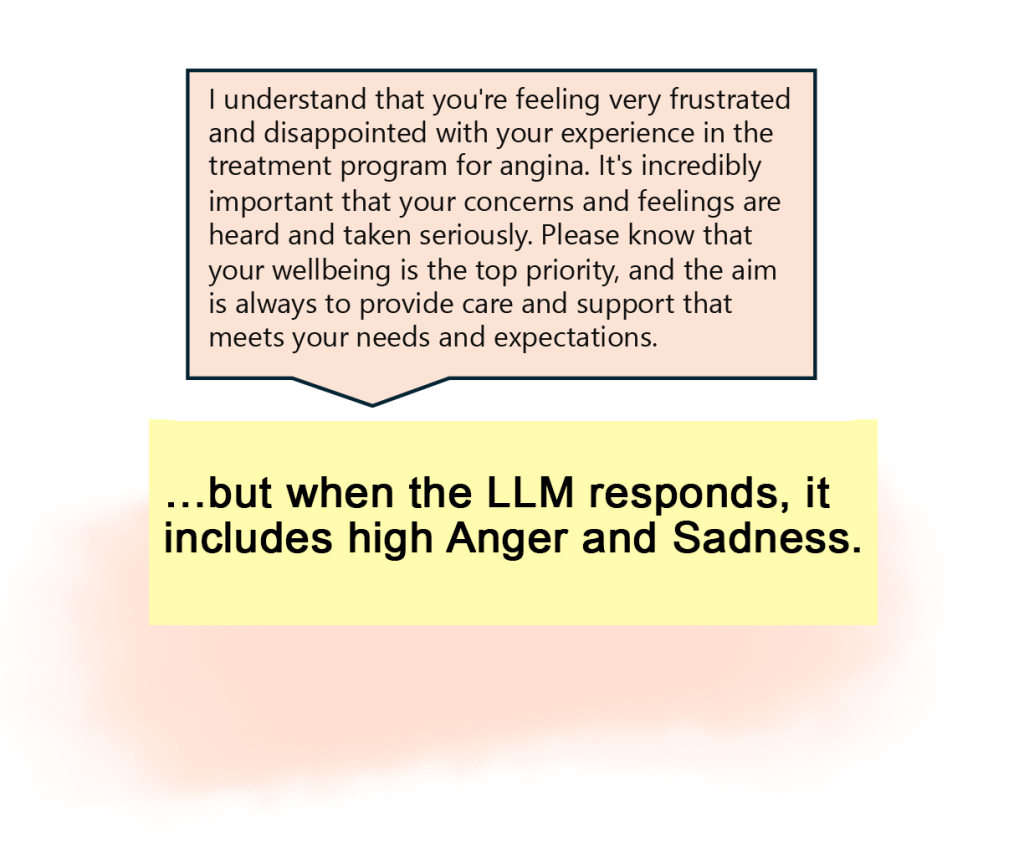

The LLM takes this information and then crafts a response:

“I understand that you’re feeling very frustrated and disappointed with your experience in the treatment program for angina. It’s incredibly important that your concerns and feelings are heard and taken seriously. Please know that your wellbeing is the top priority, and the aim is always to provide care and support that meets your needs and expectations.”

And it’s not a bad response, on its face. It seems to understand the emotional context, and its task at hand. But it does bring up a problem:

Emotional Contagions

Have you ever experienced someone else’s mood “rubbing off” on other people? When an infectious, upbeat person with a good sense of humor enters a situation and joyfully interacts with others, that mood can become contagious.

On the other hand, sometimes people who are angry make other people angry.

Fear and Sadness are also contagious.

Using VERN™ we have seen instances where customer service representatives introduce fear to the customer. This then takes hold, and the customer responds for the next few responses with an elevated level of fear.

We’ve seen it with anger too, sometimes passing from the customer to the customer service representative. And that’s not good. So to prevent the LLM from infecting your users with an emotion you don’t want them to feel unnecessarily, the response needs to be judged before it is sent.

The last thing you want is a bot making the person more angry, sad or fearful.
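If each turn of a conversation has been scored, the pattern described above, where one party introduces an emotion and the other shows it in their next responses, can be looked for in the transcript. The sketch below is an illustrative heuristic only, not VERN's method: the turn format, the speaker labels and the reuse of a 66% threshold are all assumptions.

```python
def find_contagion(turns, emotion, threshold=66):
    """Return indexes of agent turns that may have passed `emotion` to the customer.

    `turns` is a list of (speaker, scores) tuples in conversation order, where
    speaker is "agent" or "customer". An agent turn is flagged when it meets the
    threshold, the customer's last turn before it was below the threshold, and
    the customer's first turn after it is at or above the threshold.
    """
    flagged = []
    for i, (speaker, scores) in enumerate(turns):
        if speaker != "agent" or scores.get(emotion, 0) < threshold:
            continue
        before = [s for who, s in turns[:i] if who == "customer"]
        after = [s for who, s in turns[i + 1:] if who == "customer"]
        if not before or not after:
            continue  # need a customer turn on both sides to compare
        if before[-1].get(emotion, 0) < threshold <= after[0].get(emotion, 0):
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    conversation = [
        ("customer", {"fear": 10}),
        ("agent", {"fear": 70}),
        ("customer", {"fear": 75}),
    ]
    print(find_contagion(conversation, "fear"))
```

A flag here is a prompt for a human to review the exchange, not proof of cause and effect.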

Mirror, mirror, on the wall...

One of the more successful techniques to use in conversational design borrows from interpersonal communications and psychology. Mirroring in an emotional context means acknowledging and validating the sender’s emotions by rephrasing them back in an affirmative manner.

But mirroring can be considered manipulation, even if it is for good. So spitting back the emotions to the patient isn’t good enough and can cause an emotional contagion. Or, it can exacerbate the problem especially if the patient realizes they are being manipulated.

Mirroring is therefore necessary, but it may be abused. That’s where the next step in the process comes into play:

Step Three: Quality Check

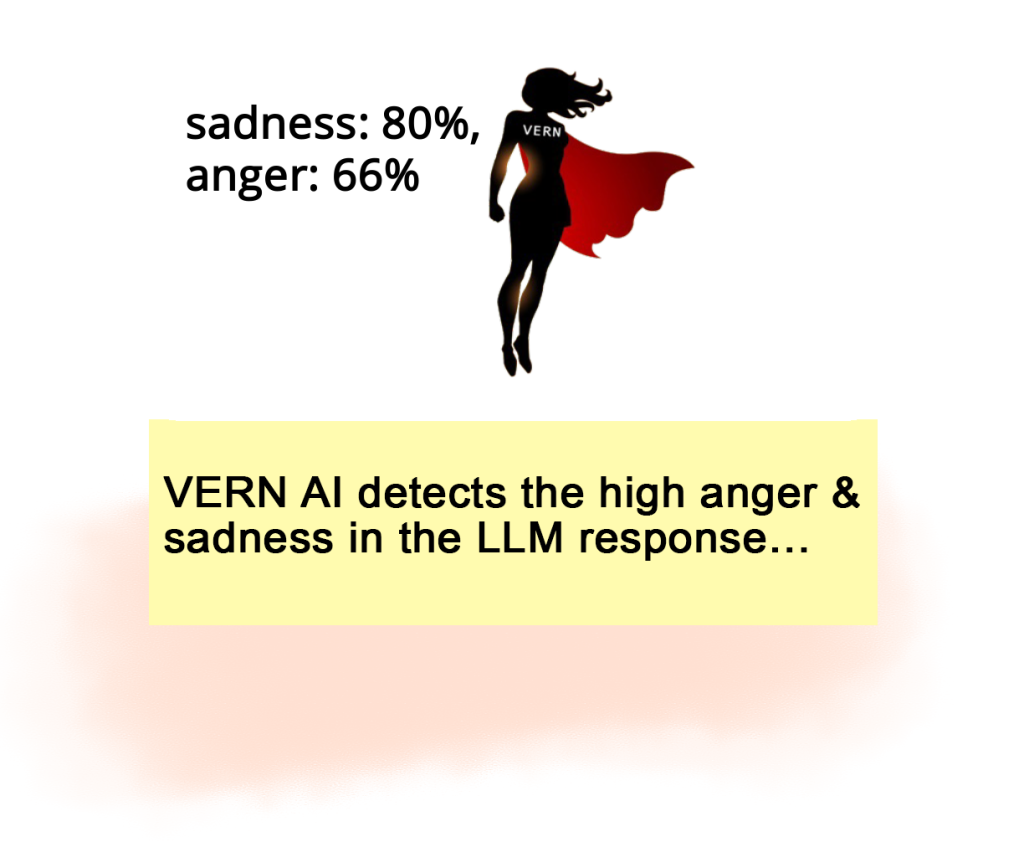

VERN detected sadness and anger in the response given by the LLM. Its attempt to mirror the patient was as expected, but it can unintentionally make the situation worse.

Checking the LLM responses for emotion is a great way to ensure that the responses defuse high negative emotions and don’t make them worse.

We recommend using this method to Quality Check your LLM’s message to your patient, before it gets to them. Intercepting the response, and providing a re-generation command if the response doesn’t pass your quality check, is key in ensuring your GenAI is helpful and healthy.
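The intercept-and-regenerate idea can be sketched as a small loop. Everything named here is an assumption for illustration: `generate` stands in for your LLM call, `detect` stands in for your emotion detection call, and the attempt limit and the 66% threshold are arbitrary choices. This sketch simply regenerates from the same prompt until a response passes.

```python
NEGATIVE_EMOTIONS = ("anger", "fear", "sadness")


def quality_checked_reply(generate, detect, prompt, threshold=66, max_attempts=3):
    """Generate a reply and return it only if it passes the emotion check.

    `generate(prompt)` returns reply text and `detect(text)` returns a dict of
    emotion scores from 0 to 100. Both are caller-supplied placeholders.
    Returns the first passing reply, or None so the caller can escalate.
    """
    for _ in range(max_attempts):
        reply = generate(prompt)
        scores = detect(reply)
        flagged = [e for e in NEGATIVE_EMOTIONS if scores.get(e, 0) >= threshold]
        if not flagged:
            return reply  # safe to pass on to the patient
    return None  # nothing passed, so hand off to a human or a fallback message
```

Returning `None` after a fixed number of attempts keeps a stubborn prompt from looping forever and gives you a clear point to hand the conversation to staff.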

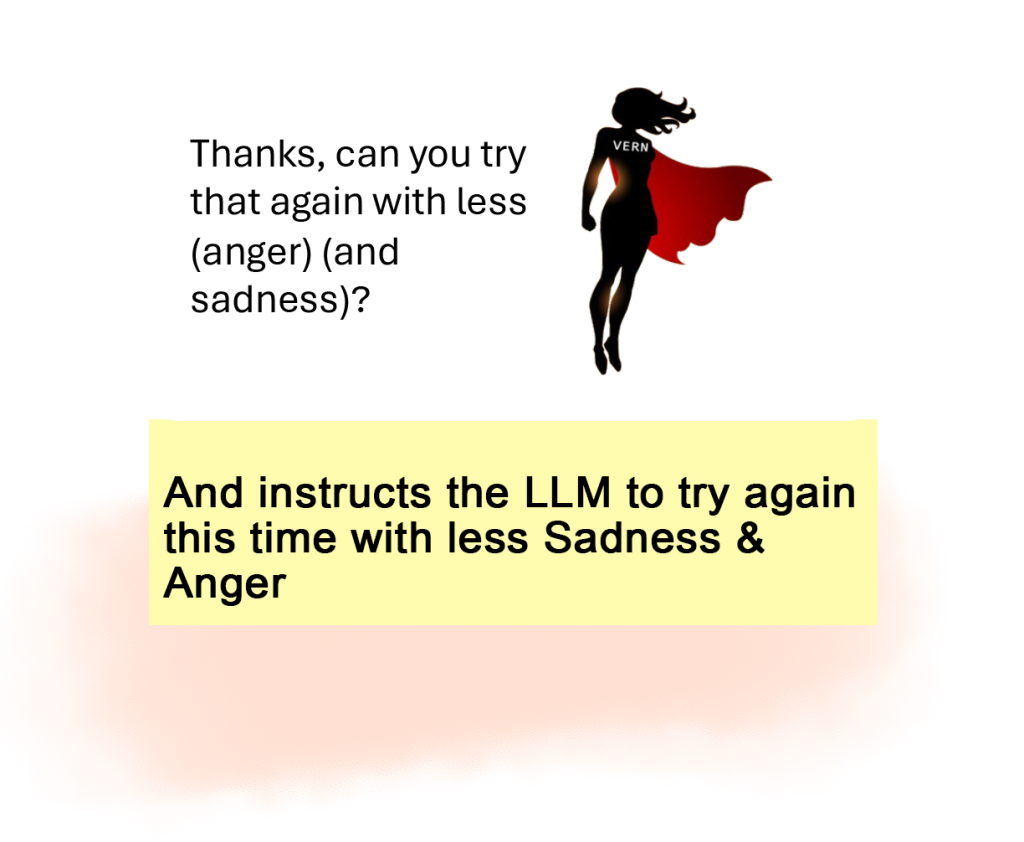

Identifying the emotions in the LLM response allows you to engineer a prompt that includes the VERN-detected emotions that went above your threshold. You can insert those emotions into a re-prompt that asks the LLM to generate a different version, with further instruction.
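A re-prompt of this kind might be assembled as follows. The wording of the instruction, the function name `build_reprompt` and the score format are assumptions made for this sketch; the actual re-prompt used in the example is shown only in the screenshot.

```python
def build_reprompt(original_prompt, rejected_reply, reply_scores, threshold=66):
    """Build a follow-up prompt naming every emotion that met the threshold."""
    flagged = [name for name, score in reply_scores.items() if score >= threshold]
    if not flagged:
        return None  # nothing to fix, so no re-prompt is needed
    emotions = " and ".join(flagged)
    return (
        f"{original_prompt}\n\n"
        f"Your previous response was: {rejected_reply}\n"
        f"It came across with too much {emotions}. "
        f"Write a different version that uses less {emotions}."
    )


if __name__ == "__main__":
    scores = {"anger": 70, "sadness": 68, "fear": 10}
    print(build_reprompt("Original prompt here.", "Rejected reply here.", scores))
```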

The LLM then responds to the command to use less anger and sadness in the response:

“Hearing about your frustrations with the treatment program is a crucial step towards making things right. We aim to ensure your journey to better health is smooth and effective, so let’s work together to address and resolve your concerns, ensuring your treatment plan meets your needs and expectations. Your feedback is invaluable in this process, and we’re dedicated to making necessary adjustments for a more positive experience.”

To ensure this response is appropriate, run it through the same check. Analyzing each response to make sure it doesn’t carry an emotional contagion is best practice.

On this pass, there is no emotion that reaches the thresholds for negative emotions. Moderate to high fear, anger and sadness aren’t detected. Therefore, the response can be passed from the LLM to the patient without scaring them, making them angry, or exacerbating their sadness.

The patient receives an empathetic response

In this real-world scenario, the patient receives a treatment of empathy that is designed to diminish negative feelings while at the same time noting what the patient is angry about. It provides a data point for staff to see specifically what the problem is and take action to address it.

Without VERN™, you are left second guessing the LLM’s responses and employing even more complex guardrails. Once you can establish the emotional context in a conversation, you can leverage those insights to direct action in a positive manner.

Soon, your healthcare and CX staff will be able to understand their callers better. Your supervisors will be able to understand how your team is performing. Your boss and their boss will understand if the customer is happy…or sad.

And, you can help your employees avoid burnout.

"V.E.R.N. is the state of the art for emotional technology. It's truly cutting edge and represents the future of artificial intelligent systems.“

Dr. Anthony Ellertson, Boise State University, GIMM Lab