Last week we discussed the VERN emotion recognition model. We talked about how the world doesn’t have a clear idea of how many emotions there are, what they are, and how the heck they can even be detected. We provided some clarity on our model and how it relates to finding emotion in communication.

Today we’re taking a look at the methodologies VERN applies to get the results our clients see.

Our Methodology, briefly

To construct and test our model, we used tens of thousands of tweets, including those from C-SPAN’s congressional Twitter list; 800+ social media responses to a viral Facebook post; hundreds of sources of local and national advertising copy; hundreds of lines randomly selected from technical manuals, terms of service, and warranty information; and news stories from the Associated Press, Reuters, and others. We also included hundreds of randomly selected excerpts from works of literature taken from Wikipedia’s “List of novels considered the greatest.” We added countless more random social media posts from Facebook, Twitter, Tumblr, Reddit, and other platforms. In short, we randomly selected as many different types of communication as possible.

We read and discussed hundreds of academic papers in neuroscience, communication, interpersonal communication, psychology, and linguistics. We used these theories to create and test identifiable emotional clues, and compared them against our model. (We considered Shannon & Weaver; works from Lazarus, Planalp, Nabi, and Rolls; Ellison and Lampe; Walters, Rogers, Bondi, Attardo and Raskin; and many more. Some were dead ends. Some were quite informative.)

We call the emotional clues we found emotives, and they make up what we consider emotions.

Unfortunately, exactly what each emotive is remains proprietary. We will not be divulging how they interact, or what combinations and other signals they produce. It’s the result of decades of study and experimentation, and we reserve the right to maybe one day pay back our student loans. However, we’re happy to share some of the signals we detect, because you would expect certain signals to be present in certain emotions. You are human, and something of an expert in human emotions, after all.

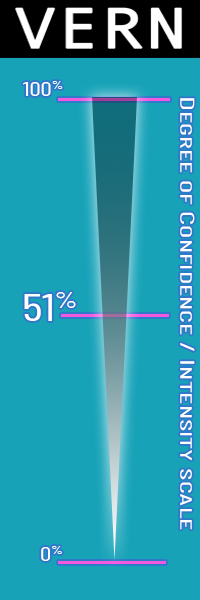

We found that each of our emotions (as we’ve constructed them) has around 20 subdimensional signals. When we detect a certain number of them in a sentence, the detector crosses a threshold and its confidence that the emotion is present rises. At 51%, it’s more likely than not that the sentence meets the criteria for that emotion as a standard receiver would interpret it. This interpretation holds up across the personal frame and is shared by others with the same frame of reference. (Meaning, it’s salient to the individual and to a closely related group.) The more signals present, the higher the detector’s confidence. At 98%, VERN is very confident both that the emotion is present and in its intensity. The closer the score gets to 100%, the closer it comes to the “core” of understanding, meaning what most people would interpret as that emotion.
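VERN’s actual signals and scoring are proprietary, so what follows is only a minimal sketch of the general thresholding idea, built on loud assumptions: each emotion has exactly 20 equally weighted signals (hypothetical labels like `anger_0`), and confidence is simply the fraction of those signals found in a sentence. The names `detect`, `Detection`, and `ANGER_SIGNALS` are illustrative, not VERN’s.

```python
from dataclasses import dataclass

# ASSUMPTION: VERN's real signals, weights, and scoring are proprietary.
# Here each emotion has exactly 20 equally weighted, hypothetical signals.
SIGNALS_PER_EMOTION = 20
PRESENCE_THRESHOLD = 0.51  # "more likely than not" that the emotion is present
HIGH_CONFIDENCE = 0.98     # very confident in presence and intensity


@dataclass
class Detection:
    emotion: str
    confidence: float
    present: bool
    high_confidence: bool


def detect(emotion: str, found: set, signal_set: frozenset) -> Detection:
    """Score one emotion by the fraction of its signals found in a sentence."""
    # Ignore any detected signals that belong to other emotions.
    hits = len(found & signal_set)
    confidence = hits / len(signal_set)
    return Detection(
        emotion=emotion,
        confidence=confidence,
        present=confidence >= PRESENCE_THRESHOLD,
        high_confidence=confidence >= HIGH_CONFIDENCE,
    )


# Hypothetical signal labels: anger_0 ... anger_19.
ANGER_SIGNALS = frozenset(f"anger_{i}" for i in range(SIGNALS_PER_EMOTION))

if __name__ == "__main__":
    one_signal = detect("anger", {"anger_0"}, ANGER_SIGNALS)
    eleven = detect("anger", {f"anger_{i}" for i in range(11)} | {"joy_3"}, ANGER_SIGNALS)
    print(one_signal.confidence, one_signal.present)  # 0.05 False
    print(eleven.confidence, eleven.present)          # 0.55 True
```

Even this toy version shows why a single emotive can’t carry a sentence on its own: one signal out of 20 scores 0.05, far below the 0.51 line. Equal weighting is a simplification; with it, 98% is only reachable when all 20 signals fire.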

For example

“Fine…whatever” rates at 51% Anger on VERN’s scale. It’s a very different story when we analyze “You better back off or I’ll blow your head off!” That sentence rates at 90% Anger confidence.

Many of the signals (emotives) are not always used as emotional vehicles in communication. We engineered VERN so that it doesn’t simply stamp a sentence as emotional as soon as it sees an emotive. Other conditions must also be in place for an emotion to cross the confidence threshold. Otherwise, sources like news copy would be classified as emotional more frequently. (Predictably, there is no emotion in technical writing.)

Luckily, not all of our methodology is exotic and proprietary. In our sadness detection, for example, we consider indicators of clinical depression. This well-known method has been shown to be effective in clinical psychology, and it is a minor referential signal in our model. In anger, we track violent language, which has been shown to be a statistically significant indicator of aggression and anger. In humor, we track insults and swear words. Each emotion depends on different emotives: contextual clues and frames of reference that are part of how we communicate emotional content. Some emotions share emotives, which is kind of spooky.
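The post names a few signal categories (violent language for anger, insults and swear words for humor) but not the word lists behind them. As a rough illustration of lexicon-based signal tagging, here is a minimal sketch with tiny, made-up word lists. `LEXICONS`, `EMOTION_SIGNALS`, `tag_signals`, and `signals_for` are hypothetical names; how VERN actually detects these signals isn’t described here and is likely more involved than keyword matching.

```python
import re

# HYPOTHETICAL word lists: the post names these categories, not their contents.
LEXICONS = {
    "violent_language": {"blow", "kill", "shoot", "smash"},
    "insult": {"idiot", "moron", "loser"},
    "swear_word": {"damn", "hell"},
}

# Which categories feed which emotion, following the examples in the post.
EMOTION_SIGNALS = {
    "anger": {"violent_language"},
    "humor": {"insult", "swear_word"},
}

WORD = re.compile(r"[a-z']+")


def tag_signals(sentence: str) -> dict[str, list[str]]:
    """Return each lexicon category that matches, with the matching words."""
    tokens = WORD.findall(sentence.lower())
    found = {}
    for category, words in LEXICONS.items():
        matches = [t for t in tokens if t in words]
        if matches:
            found[category] = matches
    return found


def signals_for(emotion: str, sentence: str) -> dict[str, list[str]]:
    """Keep only the tagged categories that count toward one emotion."""
    tagged = tag_signals(sentence)
    return {c: tagged[c] for c in EMOTION_SIGNALS[emotion] if c in tagged}


if __name__ == "__main__":
    print(signals_for("anger", "You better back off or I’ll blow your head off!"))
    # {'violent_language': ['blow']}
    print(tag_signals("Fine…whatever"))  # {}
```

Tagging “blow” as violent language also shows why a single emotive shouldn’t decide the outcome: the same word appears in “blow out the candles.”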

What could they be telling us?

(We’ll tell you in our next blog post, coming soon.)