A recent article in VentureBeat claimed that “ontology is the real guardrail” for controlling AI systems and preventing agents from misunderstanding their tasks. The argument is tidy, appealing, and technically useful in narrow enterprise applications.

It is also incomplete — and in many use cases, flatly incorrect.

Ontology can help coordinate business data. It cannot keep a human-facing AI system stable, emotionally coherent, or safely grounded across the full range of conversational contexts that people naturally bring into an interaction.

We know this because our AI Humans do it every day — without needing an ontology to dictate what they can or cannot understand.

At VERN AI and Everfriends, we have built AI systems that are flexible, emotionally intelligent, highly adaptable, and resistant to derailment. They can discuss nuclear physics, pop culture, childhood memories, grief, retirement planning, or last night’s baseball game — even when the avatar was not designed for any of those contexts.

Echo, one of our AI Humans created for HelloMyFriend, has competently discussed advanced scientific topics on camera. She can also pivot back into elder companionship without losing tone, focus, or stability.

Our systems do not silo knowledge.

They do not require rigid ontologies.

And they do not “break” simply because the topic shifts.

Why? Because ontology is not the guardrail. Emotional and conversational intelligence are.

The Limits of Ontology as a Control Mechanism

Ontology-based AI is built around a formal structure: a hierarchy of concepts, relationships, definitions, and constraints. Dattaraj Rao, the author of the VentureBeat article, is right that this can help solve certain problems:

- enterprise data alignment

- resolving conflicting business definitions

- ensuring consistency across structured systems

- traceable reasoning over narrow domains

When the task is:

“Make sure that ‘customer’ means the same thing across five different databases,”

ontology is extremely useful.
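
As a minimal sketch of that kind of alignment, the Python below maps source-specific labels onto one canonical concept. The database names, the labels, and the `canonical_concept` helper are all hypothetical, chosen only for illustration; a real ontology would typically also carry relationships and constraints between concepts.

```python
# Hypothetical mapping from (database, local label) to a canonical concept.
# None of these names come from a real schema.
CANONICAL_TERMS = {
    ("crm", "client"): "Customer",
    ("billing", "account_holder"): "Customer",
    ("support", "user"): "Customer",
    ("warehouse", "supplier"): "Vendor",
}


def canonical_concept(database: str, label: str) -> str:
    """Return the shared concept for a source-specific label.

    Raises KeyError for unmapped labels so gaps surface instead of being guessed.
    """
    return CANONICAL_TERMS[(database.lower(), label.lower())]


print(canonical_concept("CRM", "client"))
print(canonical_concept("billing", "account_holder"))
```

Both calls resolve to the same concept, which is the narrow, structured job the article credits ontology with doing well.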

But when the task becomes:

“Engage a human in an unscripted conversation across open-domain topics, detect their emotional state, maintain psychological safety, and adapt to whatever they say next,”

ontology collapses immediately.

- Human conversation does not follow ontological boundaries.

- Emotion does not follow a schema.

- Context is fluid, emergent, and constantly shifting.

Ontology was built for systems.

Humans are not systems.

LLMs Already Handle Open Domains — Ontology Will Only Slow Them Down

Modern large language models can already operate across massive, open-ended knowledge spaces. They do not need an ontology to stay within a domain because they are not limited to domains in the first place. Their reasoning ability is not based on structured relational maps — it is based on learned representations across billions of examples.

But this strength is also the challenge:

Without an external guidance system, LLMs drift.

They lose conversational grounding not because they lack an ontology, but because:

- they cannot detect emotion

- they cannot measure emotional intensity

- they cannot track emotional change over time

- they cannot regulate their own tone

- they cannot maintain conversational stability across long or sensitive dialogues

- they have no internal mechanism for “when to stop” or “what not to say”

Ontology addresses none of these failures.

It can constrain knowledge.

It cannot constrain behavior.

What Actually Keeps AI Humans Stable: Emotional Intelligence + Compact Internal Logic

VERN AI’s architecture is fundamentally different.

Instead of using ontology to force the AI into a narrow conceptual box, we provide a compact, internal, emotionally intelligent control layer that does four things ontology cannot:

Detects Real Emotion, Not Guessed Emotion

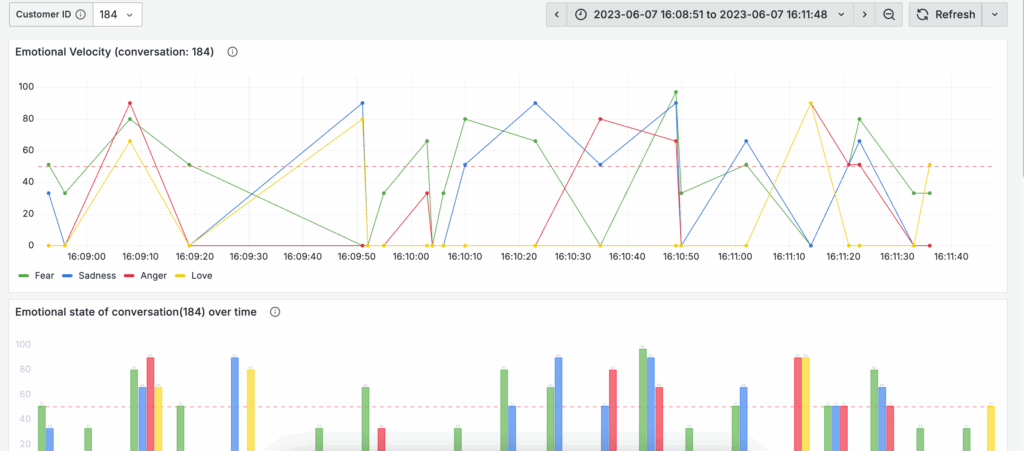

VERN AI annotates every user utterance with:

- a primary emotion

- a 0–100 intensity score

- significance thresholds

- emotional velocity (how feelings rise, fall, or escalate)

This gives the AI an explicit, measured signal of how the user feels, so it responds to an annotated emotional state instead of guessing from wording alone.

Ontology cannot do this.

LLMs alone cannot do this.

VERN does.
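
To make the shape of such an annotation concrete, here is a minimal Python sketch of a record holding the four fields listed above. It is not VERN's implementation or API: the `EmotionAnnotation` class, the `annotate` helper, the threshold value, and the definition of velocity as a turn-to-turn difference in intensity are all assumptions made for illustration. Emotion detection itself is out of scope here; the label and score are simply passed in.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EmotionAnnotation:
    """One utterance's annotation (hypothetical structure)."""
    primary_emotion: str  # e.g. "sadness"
    intensity: int        # score from 0 to 100
    significant: bool     # True when intensity meets the threshold
    velocity: float       # change in intensity since the previous utterance


# Assumed value for illustration; the article does not state real thresholds.
SIGNIFICANCE_THRESHOLD = 50


def annotate(emotion: str, intensity: int,
             previous: Optional[EmotionAnnotation] = None) -> EmotionAnnotation:
    """Build an annotation from an emotion label and a 0-100 intensity."""
    if not 0 <= intensity <= 100:
        raise ValueError("intensity must be between 0 and 100")
    # Velocity is modeled here as the simple difference from the previous turn.
    velocity = 0.0 if previous is None else float(intensity - previous.intensity)
    return EmotionAnnotation(
        primary_emotion=emotion,
        intensity=intensity,
        significant=intensity >= SIGNIFICANCE_THRESHOLD,
        velocity=velocity,
    )


first = annotate("sadness", 40)
second = annotate("sadness", 70, previous=first)
print(second.significant, second.velocity)
```

In this sketch the second utterance crosses the assumed threshold and carries a positive velocity, which is the kind of rising signal a control layer could act on.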

Maintains Conversational Stability Across Domains

Because our emotional and conversational engine sits outside the LLM, we can keep avatars aligned even when the content of conversation jumps dramatically.

Echo can discuss nuclear physics, Alzheimer’s caregiving, or pet adoption.

Because she isn’t controlled by domain.

She is controlled by emotional and conversational coherence.

Prevents Drift by Regulating Behavior, Not Knowledge

Ontology tries to constrain what the AI knows.

VERN constrains how the AI behaves.

This is the difference between:

“We defined the concept of ‘atom’ incorrectly” and

“You’re spiraling emotionally — adjust tone, slow pacing, redirect, or escalate safety protocol.”

Ontology can fix the first problem.

Only emotional intelligence can fix the second.
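
The contrast can be sketched as a small policy that chooses a response from emotional state alone and never looks at the topic. This is an illustrative sketch only: the `Action` enum, the `choose_action` function, and every cutoff are hypothetical and do not describe VERN's actual rules.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    ADJUST_TONE = "adjust tone"
    SLOW_PACING = "slow pacing"
    REDIRECT = "redirect"
    ESCALATE_SAFETY = "escalate safety protocol"


def choose_action(intensity: int, velocity: float) -> Action:
    """Pick a behavioral response from a 0-100 intensity and its rate of change.

    All cutoffs below are illustrative assumptions.
    """
    if intensity >= 90:
        return Action.ESCALATE_SAFETY   # extreme distress: hand off to safety handling
    if intensity >= 70 and velocity > 0:
        return Action.REDIRECT          # high and still rising: steer the conversation
    if velocity >= 20:
        return Action.SLOW_PACING       # sharp rise from a lower level: slow down
    if intensity >= 50:
        return Action.ADJUST_TONE       # elevated but steady: soften tone
    return Action.CONTINUE


print(choose_action(95, 5.0).value)
print(choose_action(30, 0.0).value)
```

Because the function takes no topic argument, the same rules apply whether the conversation is about physics or grief, which is the domain-agnostic property the section describes.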

Enables Open-Domain Intelligence Without Sacrificing Safety

With VERN:

- The AI can roam freely across topics

- Without losing grounding

- Without misinterpreting emotional tone

- Without escalating inappropriately

- Without hallucinating danger or offering unsafe advice

This is why our AI Humans perform well as podcast guests, digital companions, mentors, interviewees, and open-domain conversational partners — not because we created an ontology wide enough to cover all of human knowledge, but because we built a behavioral foundation strong enough to handle human unpredictability.

Ontology Provides Narrow Control. Emotional Intelligence Provides Real Control.

Ontology helps define the meaning of “customer” inside a CRM system.

It does not tell you how to speak to a crying customer.

Ontology can ensure data consistency.

It cannot ensure emotional consistency.

Ontology can make agents more accurate.

It cannot make them more human.

The ontology-as-guardrail argument applies only to one narrow class of AI problems:

Structured, deterministic, enterprise data.

But for any AI system meant to:

- converse naturally

- handle diverse topics

- detect and respond to emotion

- maintain psychological safety

- adjust tone based on user state

- prevent conversational drift

- operate as a human-facing agent

ontology is insufficient.

And relying on it alone is risky and limiting.

The Future of AI Agents Is Open-Domain, Emotionally Aware, and Ontology-Free

VERN AI has proven that AI can remain stable, grounded, and safe across a wide range of domains — without using ontology as a guardrail. Our control systems are compact, flexible, emotionally intelligent, and domain-agnostic.

This is why our AI Humans:

- can be trained for elder care but also discuss quantum mechanics

- can act as virtual friends but also give coherent business advice

- can be designed for companionship yet handle scientific interviews gracefully

They are not siloed.

They are not brittle.

They are not constrained to a predefined conceptual map.

They are governed by emotional intelligence — not ontology.

This is the true guardrail.

This is why our systems outperform ontologically bounded agents.

And this is why the future of AI will not be dictated by rigid knowledge structures, but by flexible, emotionally aware control systems that make AI safer, more human, and more capable.

Ontology solves a small slice of the problem.

VERN solves the part that matters most.