When Yara AI shut down, its founder announced a sweeping conclusion: emotionally responsive AI systems are too dangerous to use in mental-health-related contexts. The claim was absolute: no matter how such systems are built, they will inevitably fail when users are truly vulnerable.

That interpretation is already circulating in the media and in professional communities. But it is both misleading and technically unsound.

Yara did not prove that emotionally aware AI is impossible.

Yara proved something far more specific — and far more important:

Emotion-blind AI will always fail in emotionally sensitive environments.

As we have documented extensively in our own research, “Large Language Models Fail to Feel,” the emotion-blindness Yara exhibited is neither mysterious nor inevitable. It is what happens when emotional understanding is delegated to systems that cannot actually detect emotion.

What We Know — and What We Don’t — About Yara’s Failure

The public explanation for Yara’s shutdown is detailed, but it stops short of describing the system’s internal emotional architecture. The founder described:

“Robust filters”

“Supervisory mechanisms”

“Strict instructions”

And yet, responses that still became unsafe when users were in distress

What he did not describe, and what no public material provides evidence of, is a dedicated emotional-annotation layer or any external emotional-intelligence infrastructure.

There is no indication that Yara used measurable emotion detection, intensity scoring, emotional trend tracking, or any form of supervisory emotional signal independent of the generative LLM.

Based on what is publicly known, the most reasonable inference is this:

Yara relied on the LLM itself (or on LLM-derived heuristics) to infer users’ emotional states. The difference between inferring emotion and detecting it is crucial.

Why Relying on LLMs for Emotion Is a Predictable Path to Failure

In “Large Language Models Fail to Feel,” we demonstrated — through controlled analysis and extensive testing — that LLMs do not detect emotion. They predict it.

This is not a semantic difference. It is a structural one.

LLMs:

Infer emotional labels by pattern matching, not by reading an emotional signal

Produce different emotional interpretations for the same input on different runs

Conflate tone with emotion

Miss escalation entirely

Cannot monitor emotional progression throughout a conversation

Cannot quantify emotional intensity
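The run-to-run inconsistency in the list above is something anyone can check. The sketch below is a minimal illustration, not the methodology used in “Large Language Models Fail to Feel”: it assumes you have already sent the same input to a model several times and collected the emotion label each run returned, and it reports the most common label plus the share of runs that agreed with it. The function name `label_agreement` and the sample labels are hypothetical.

```python
from collections import Counter


def label_agreement(labels: list[str]) -> tuple[str, float]:
    """Return the most common label and the fraction of runs that produced it.

    `labels` holds the emotion label a model returned for ONE unchanged input
    across repeated, independent runs (hypothetical helper, for illustration).
    """
    if not labels:
        raise ValueError("need at least one run")
    label, count = Counter(labels).most_common(1)[0]
    return label, count / len(labels)


if __name__ == "__main__":
    # Hypothetical labels for illustration only; not measured results.
    runs = ["Sadness", "Fear", "Sadness", "Anger", "Sadness"]
    print(label_agreement(runs))  # ('Sadness', 0.6)
```

An agreement below 1.0 for an unchanged input is the instability described above; a deterministic detector would return the same label on every run and score 1.0.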

Most critically:

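LLMs generate language and interpret language using the same probability engine.

This makes them inherently unreliable when asked to supervise their own emotional outputs: the check runs on the same engine as the response it is checking, so a misreading that shaped the response is likely to pass the check as well.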

If a system relies on LLM inference to determine emotional state, then:

Emotional misclassification is guaranteed

Safety mechanisms trigger too late or not at all

Tone drift becomes unavoidable

High-risk users receive inconsistent support

The system becomes unstable precisely when users need stability most

This failure pattern mirrors exactly what Yara’s founder described.

We Must Draw the Right Conclusion: The Problem Was Not Emotionally Aware AI — It Was Emotionally Blind Architecture

Yara’s founder concluded that emotionally supportive AI should not be attempted.

But the real lesson is different:

Emotionally sensitive AI must not be built on top of models that cannot feel.

The flaw is architectural, not categorical.

Systems that rely on LLMs to detect emotion will always be unreliable in mental-health-adjacent or high-emotion contexts. They cannot be stable because they never see the emotional data that stable behavior depends on.

If Yara indeed lacked a structured emotional-annotation layer — and based on public information, there is no evidence that one existed — then the system behaved exactly as one would expect: unpredictably, inconsistently, and unsafely.

The result was not inevitable. It was designed.

What Yara Missed — and What VERN AI Provides

The fundamental difference lies here:

Yara treated emotion as inference.

VERN treats emotion as data.

VERN AI provides:

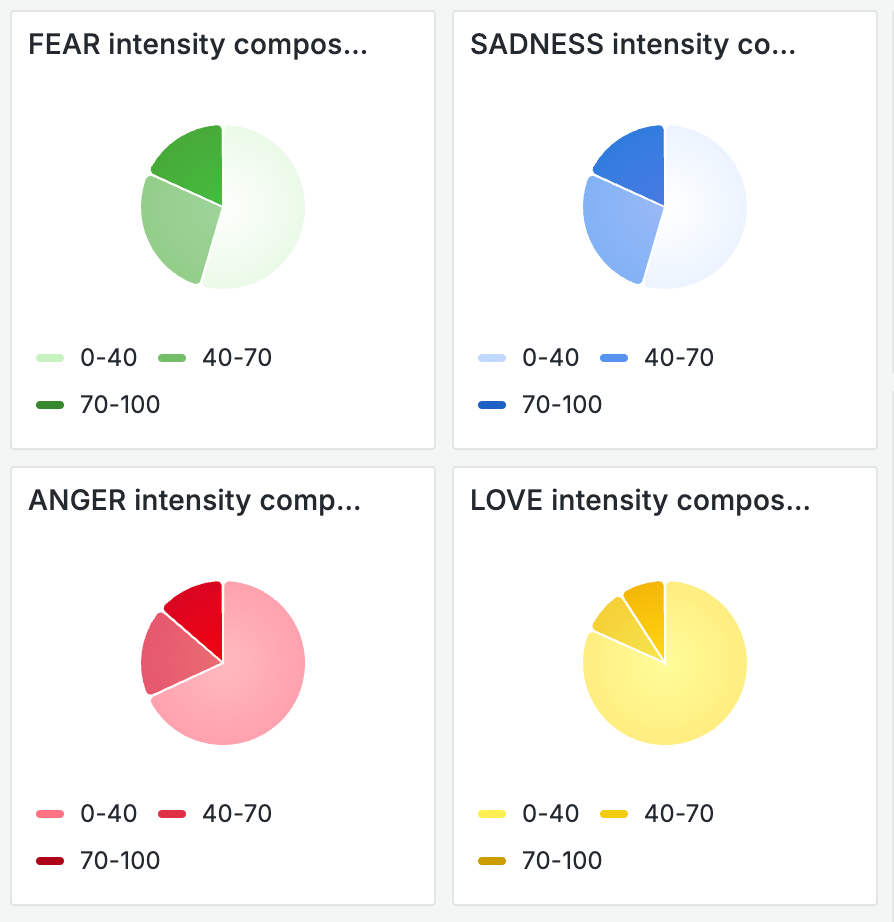

Sentence-level annotation of core emotions (Anger, Sadness, Fear, Love/Joy)

Intensity scoring on a 0–100 scale

Statistical significance thresholds

Emotional velocity and trend tracking

An external emotional-signal layer independent of generative modeling

Emotional governance that prevents drift long before it becomes unsafe
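To make “emotion as data” concrete, the sketch below shows one way an external signal layer could consume sentence-level scores for the four core emotions on a 0–100 scale, flag high intensity, and approximate emotional velocity as the change in a score between consecutive sentences. It is a minimal sketch under stated assumptions, not VERN’s implementation or API: the scores are assumed to come from some external detector, and the class name `EmotionTracker` and both threshold values are hypothetical. Statistical significance testing is omitted.

```python
from dataclasses import dataclass, field

# Core emotions named in the article. Scores are assumed to be 0-100
# intensities from an external detector (hypothetical; not VERN's API).
EMOTIONS = ("Anger", "Sadness", "Fear", "Love/Joy")
# Love/Joy is validated but, in this sketch, not treated as a distress signal.
DISTRESS_EMOTIONS = ("Anger", "Sadness", "Fear")


@dataclass
class EmotionTracker:
    """Hypothetical tracker: flags high intensity and fast-rising emotion.

    Both thresholds are illustrative assumptions, not published parameters.
    """

    intensity_threshold: float = 60.0  # flag any distress score at/above this
    velocity_threshold: float = 15.0   # flag a per-sentence rise at/above this
    history: list[dict[str, float]] = field(default_factory=list)

    def observe(self, scores: dict[str, float]) -> list[str]:
        """Record one sentence's scores and return reasons to escalate."""
        for emotion in EMOTIONS:
            value = scores.get(emotion, 0.0)
            if not 0.0 <= value <= 100.0:
                raise ValueError(f"{emotion} score {value} is outside 0-100")

        reasons: list[str] = []
        previous = self.history[-1] if self.history else None
        for emotion in DISTRESS_EMOTIONS:
            value = scores.get(emotion, 0.0)
            if value >= self.intensity_threshold:
                reasons.append(f"{emotion} intensity {value:.0f}")
            if previous is not None:
                # Velocity approximated as the change since the previous sentence.
                velocity = value - previous.get(emotion, 0.0)
                if velocity >= self.velocity_threshold:
                    reasons.append(f"{emotion} rising by {velocity:.0f}")

        self.history.append(dict(scores))
        return reasons


if __name__ == "__main__":
    tracker = EmotionTracker()
    print(tracker.observe({"Sadness": 30, "Fear": 10}))  # []
    print(tracker.observe({"Sadness": 50, "Fear": 20}))  # ['Sadness rising by 20']
    print(tracker.observe({"Sadness": 70, "Fear": 22}))
    # ['Sadness intensity 70', 'Sadness rising by 20']
```

Because the tracker runs outside the generative model, its flags do not depend on the model reading its own output correctly. In a real deployment, the thresholds would need to be set and validated against labeled data rather than chosen by hand.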

When emotion is measurable, it becomes controllable.

When emotion is controllable, systems become predictable.

And when systems are predictable, they can be made safe.

This is why VERN AI consistently delivers coherent, emotionally aligned outputs even in challenging use cases — including mental-health–adjacent environments, crisis-support detection, patient experience, and emotionally charged customer service.

This is not a theoretical claim. It is demonstrated performance.

Yara Did Not Show the Limits of AI.

It Showed the Limits of Emotion-Blind AI.

Yara’s founder concluded that his failure means emotionally aware AI cannot be deployed safely. But that conclusion only holds if one assumes his architecture was the only possible architecture.

It wasn’t.

It was simply the wrong architecture.

Emotionally intelligent AI is possible.

It is happening now.

It just requires the right foundation — one that does not depend on generative models guessing human emotion.

The shutdown of Yara AI is not a warning against building emotionally supportive systems.

It is a warning against building them without measurable emotional intelligence.

If the industry takes away the wrong lesson from Yara, we risk halting progress in areas where AI can legitimately help — crisis detection, early intervention, mental-health triage, patient support, emotional analytics, and more.

The correct lesson is simpler and far more constructive:

Emotion cannot be inferred.

It must be detected.

LLMs alone cannot do it.

VERN can.

And when we start with the right emotional architecture, emotionally supportive AI does not become dangerous.

It becomes dependable.

If the goal is safety — and it must be — then the path forward is not abandonment.

It is adoption of a stronger foundation.

VERN AI provides that foundation.