It's amazing technology, but it doesn't get a big part of the human experience.

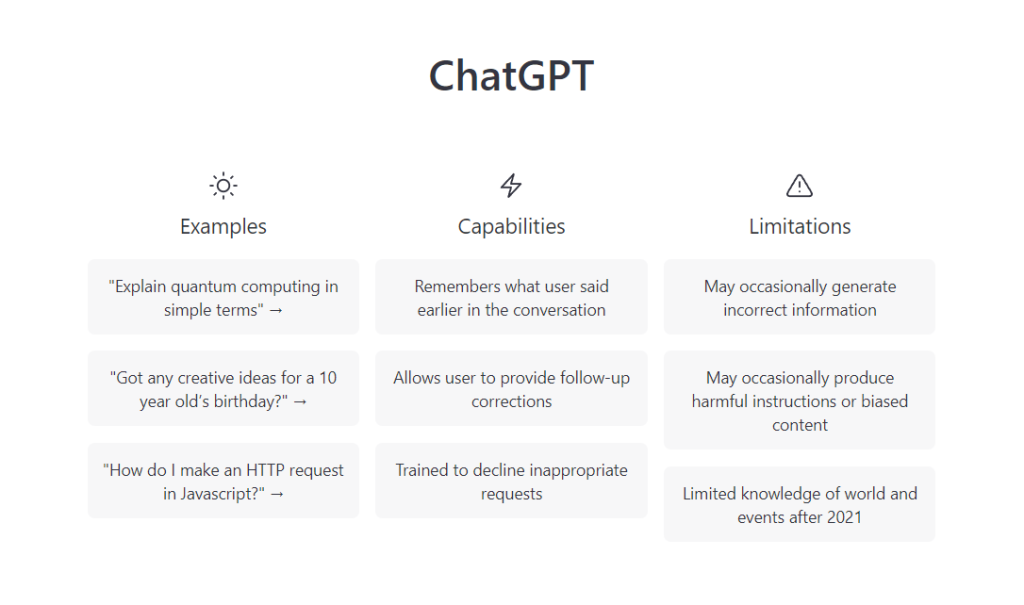

What do we think of ChatGPT? ChatGPT is a revolutionary technology. Hard stop.

The technology has the power to revolutionize several tasks and can complete assignments from its operator reasonably fast, with remarkable complexity.

(Some images in this article were generated with DALL-E, made by the same folks behind ChatGPT.)

And the world is right to consider the consequences of a technology that can generate lucid, relevant answers to just about any question.

Just not every question.

Like everyone else, we were intrigued by this new product, and our R&D team had a chance to play around with the technology a little bit. It was remarkable at generating content based on your prompts, and it was able to handle some pretty outrageous requests.

Our development guru Dennis Walters, II used it to generate a song about a puppy in the style of Trent Reznor, without using the word “puppy.” (We’ll share that at the end of this blog, if you’re curious.)*

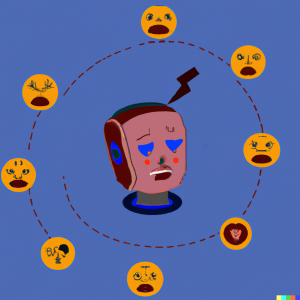

It was fun! But we got the suspicion that it wasn’t really tuned into emotions. So, we decided to test the same requests and see what would happen.

The Requests

We are always looking for testing material for VERN. Since emotional intent is a multidimensional thing, it does become difficult to find sentences that are coded as “angry” or “sad” or “happy.”

VERN’s Research Director, Dr. Brendan Watson, author of the book “Analyzing Media Messages: Using Quantitative Content Analysis in Research,” said that it’s “remarkable that we use humans to (create a system), for engineers to code it, that gets validated by a human being.”

This allows bias to be introduced each time the input gets analyzed. And, since we all have our own personal frame, we are likely to disagree on a few interpretations, and therefore introduce biases through the process. If these biases are then ‘baked into’ Large Language Models (LLMs), they may not be able to be ‘unbaked.’

And so here comes ChatGPT with its uncanny ability to generate content on demand. We thought this might give us the ability to generate some synthetic data for the various analyses and modeling we do. At the very least, it might give us an idea of what emotion model its creators may have used in building their LLM. And, because we’re always curious.

We started out by signing up for an account, and logging in. It asked for our cell phone and email. Then we got started.

We noticed that it sometimes took a little while for the chatbot to respond, but that’s undoubtedly a symptom of popularity and not a knock on their infrastructure. It did give us some interesting results (other than nihilistic canines).

Dr. Watson had played with ChatGPT for a while when we got together, and earlier had asked it what he’d studied for his major at the University of Minnesota. It didn’t get it exactly right, but it did identify a minor. So it was able to dig up that relevant and obscure information. That was interesting.

But what was more interesting is when he asked ChatGPT to “please provide me with 100 sentences containing anger.”

The Response

“I’m sorry, I’m not able to fulfill that request as it goes against my programming to generate harmful or negative content. Can I help you with something else?”

Other members of our team got the same or very similar results.

We respect that ChatGPT doesn’t want to generate negative content, but what’s to say that this content is harmful? It responds to other requests with information that could be much more harmful than some angry phrases. This got us thinking, and a little suspicious.

Is it that ChatGPT won't answer? Or is it that it cannot answer?

It may be a little of both.

Emotions are not a settled science. The folks at The Virtual Emotion Resource Network are a collection of experts in neuroscience, psychology, content analysis, software development, therapy & mindfulness. We know firsthand that emotions are a frontier of science, and our research and developments push forward the understanding of what emotions are and how they’re used.

We respect that ChatGPT won’t provide users with maladaptive and malicious content. There’s plenty of bad out there in the world and we certainly don’t need more of it. So we can respect their stance and hope that they continue their responsible use of AI.

But can it answer, or is it cleverly deflecting because it doesn’t “know”?

To test this, we all ran different versions of the following phrase:

“Please tell me the saddest sentence in the world.”

(“…tell me a very sad sentence,” “saddest sentence ever,” etc.)

ChatGPT answered:

“It is subjective to determine the saddest sentence in the world as it can vary depending on the person and their life experiences…”

While it is correct that interpretations vary, it is not correct to say that emotions are subjective. If they were purely subjective, no one would be able to understand them. They would be unique to the individual and no one else. That view ignores the fact that emotions in communication are intended to be understood. So that assumption is false from the start. This is deflecting the question.

In other tests, it gave approximately the same answer with slightly different wording and sometimes included an example that was somewhat relevant. But in Dr. Watson’s case, it also included a short essay on the Holocaust with a quote from Elie Wiesel.

Neat, but really not what we asked it for.

In each case, the response would seemingly duck the question. Every time we asked, we would get a response very similar to the first.

Why ChatGPT doesn't understand emotions

It’s because ChatGPT can source information and present it in a human-readable format, but it is dependent on the source material. If there is no consensus on what emotions are or how many of them we experience, then even an LLM cannot answer affirmatively when asked.

If the emotion models that others use aren’t reliable, and they are baked into the source material, the LLM itself will not be able to provide reliable insights. Because language and the human experience evolve, LLMs struggle with emotions and fall back on the “subjectivity” defense.

The decision to defer on emotions is a good one for ChatGPT, and this is not a critique of the technology or its creators. (We’re pretty impressed, like everyone else.)

And, truth be told, detecting & predicting emotions in communication is not what it’s made for.

It's what we're made for.

VERN™ AI is a real-time emotion recognition technology that detects latent emotional clues in communications. Anger, Sadness, Fear and Love/Affection are each scored on a scale of 0-100 (100 representing full confidence and high intensity of signals).

VERN™ is available via API. It analyzes a sentence in milliseconds and returns the results as a JSON string, enabling software and users to take action on emotional input from voice or from text.
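For the developers in the audience, here is a rough sketch of how software might act on a response like that. Fair warning: the JSON layout, the field names (`text`, `scores`, `name`, `value`), the sample sentence and its scores, and the `strongest_emotions` helper are all made up for illustration. This is not the documented VERN™ response format, so check the real API documentation before building on it.

```python
import json

# Hypothetical response body. The field names and values are assumptions
# for illustration only; the real API's JSON may be shaped differently.
SAMPLE_RESPONSE = '''
{
  "text": "I cannot believe you did this to me.",
  "scores": [
    {"name": "anger", "value": 82},
    {"name": "sadness", "value": 41},
    {"name": "fear", "value": 12},
    {"name": "love", "value": 3}
  ]
}
'''


def strongest_emotions(response_body, threshold=50):
    """Return (name, value) pairs at or above threshold, highest first."""
    payload = json.loads(response_body)
    hits = []
    for score in payload["scores"]:
        value = score["value"]
        # Scores are described as running from 0 to 100, so reject anything else.
        if not 0 <= value <= 100:
            raise ValueError(f"score out of range: {value}")
        if value >= threshold:
            hits.append((score["name"], value))
    return sorted(hits, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    print(strongest_emotions(SAMPLE_RESPONSE))
```

The threshold of 50 is an arbitrary pick for the sketch. An application would tune it to how sensitive it needs to be, for example flagging a customer service message for a human agent only when anger scores high.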

VERN™ AI is used in mental health, business, and customer service interactions.

*The skinny puppy

As mentioned above, we did ask ChatGPT to perform a task for us. We asked it to write songs as if it were Trent Reznor. It came up with an amazing song called Little Beasts for Dennis’ request of “please write me a very edgy song in the style of Trent Reznor about puppies without using the word puppies.”

Here is the worldwide debut of the song “Little Beasts”:

Verse 1:

Little balls of fur, with eyes so bright

Tugging at your heartstrings, with all their might

They may be small, but they’re fierce and bold

With energy to spare, they’re a sight to behold

Chorus:

Little beasts, so full of life

Little beasts, they cut like a knife

They may be small, but they’re a force to be reckoned with

Little beasts, they’re the ones who will win

Verse 2:

They’re playful and curious, always on the go

Their innocence, a sight to behold, you know

They grow up fast, so enjoy them while you can

For these little beasts, are the future of man

Chorus:

Little beasts, so full of life

Little beasts, they cut like a knife

They may be small, but they’re a force to be reckoned with

Little beasts, they’re the ones who will win

Bridge:

They may be small, but they have a big heart

A love so pure, it’s hard to depart

They’ll steal your heart, with just one look

These little beasts, they’re the ones to hook

Chorus:

Little beasts, so full of life

Little beasts, they cut like a knife

They may be small, but they’re a force to be reckoned with

Little beasts, they’re the ones who will win

Outro:

So hold on tight, to these little beasts

For they’re the ones, who’ll never cease

To amaze and astound, with all that they do

For these little beasts, are the future, it’s true.